Test automation streamlines repetitive tasks, accelerates testing processes, and ensures consistent, reliable results in software testing. Reporting on test automation provides actionable insights and visibility into the test outcomes, enabling teams to make informed decisions, identify issues promptly, and enhance overall software quality efficiently.

What is a test automation report?

A test automation report is a comprehensive document that details the execution and results of automated test cases. It showcases various metrics, including test coverage, pass/fail statuses, error logs, and performance indicators.

This report offers visibility into the health of the software, pinpoints areas for improvement, and enables informed decision-making for optimizing testing strategies and enhancing software quality.

Why is it important to report on test automation?

Reporting on test automation offers clear visibility into test execution and provides stakeholders with a comprehensive view of test coverage. This data aids informed decision-making and fosters effective communication, enabling teams to prioritize improvements and continuously enhance their testing strategies. Ultimately, it helps teams direct testing effort where it matters most, which saves time and increases the impact of each test run.

Let’s consider a scenario:

Imagine a team working on an e-commerce platform. They’ve implemented automated tests to ensure that every time a user makes a purchase, the payment processing works seamlessly across various devices and browsers.

Using a test management tool like TestRail, the team sets up these automated tests to run regularly. Once the tests are executed, TestRail generates a detailed report. This report includes information such as the number of successful transactions, any failed payments, the devices or browsers where issues occurred, and the specific steps that led to failures.

The report might highlight that a payment fails consistently on mobile devices using Safari.

With this information, the team can dive deeper into the issue, identify that the root cause is an incompatibility between the payment gateway and Safari on mobile, and prioritize fixing this issue.

A platform like TestRail also allows them to track these issues, assign tasks to team members, and monitor progress. As they resolve issues, they rerun the tests and track improvements over time using TestRail’s historical data and test case versioning features. This iterative process ensures the payment system becomes more reliable across devices and browsers.
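To make the Safari example concrete, the following minimal Python sketch shows one way a report can surface that kind of pattern: group results by device and browser, then compute a failure rate for each combination. The record fields (`device`, `browser`, `status`) and the sample data are hypothetical and do not reflect TestRail's actual export format.

```python
from collections import Counter

# Hypothetical results from one run of the checkout tests. The field names
# and values are illustrative only; they are not a TestRail export format.
results = [
    {"test": "pay_with_card", "device": "mobile", "browser": "Safari", "status": "failed"},
    {"test": "pay_with_card", "device": "mobile", "browser": "Chrome", "status": "passed"},
    {"test": "pay_with_card", "device": "desktop", "browser": "Safari", "status": "passed"},
    {"test": "pay_with_wallet", "device": "mobile", "browser": "Safari", "status": "failed"},
    {"test": "pay_with_wallet", "device": "desktop", "browser": "Firefox", "status": "passed"},
]


def failure_rate_by_platform(results):
    """Return {(device, browser): share of runs that failed} for each platform seen."""
    totals = Counter((r["device"], r["browser"]) for r in results)
    failures = Counter(
        (r["device"], r["browser"]) for r in results if r["status"] == "failed"
    )
    # Counter returns 0 for platforms with no failures, so every rate is defined.
    return {platform: failures[platform] / count for platform, count in totals.items()}


# List platforms from most to least failure-prone.
rates = failure_rate_by_platform(results)
for (device, browser), rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{device:<8} {browser:<8} {rate:.0%}")
```

In this sample data, mobile Safari is the only combination where every run failed, which is the kind of signal that would send the team looking at the payment gateway on that platform.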

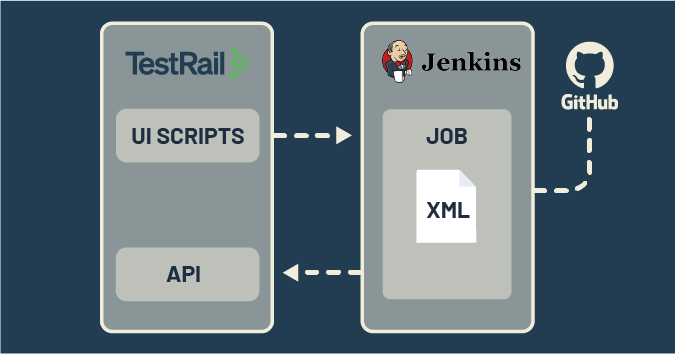

Image: TestRail’s integration with automated tests provides real-time, actionable insights and empowers teams to identify, address, and track issues efficiently.

Types of test automation reports

Test automation reports are the backbone of informed decision-making in software testing. They provide a comprehensive overview of test results, enabling teams to understand the testing landscape and make data-driven improvements.

Here’s a table outlining types of test automation reports commonly used in software testing:

| Report Type | Purpose | Additional Info |
| --- | --- | --- |
| Summary reports | Provide an overview of test execution | High-level insights into test outcomes: tests run, passed, failed |
| Detailed test case reports | Present specific details about individual test cases | Status (pass/fail), execution time, associated issues/errors |
| Coverage reports | Show code/functionality coverage by tests | Metrics like code coverage, requirement coverage, or specified criteria |
| Trend analysis reports | Display trends over time in test results | Track progress, identify patterns, gauge improvements or regressions in software quality |
| Failure analysis reports | Focus on failed test cases and their details | Detailed information on reasons for failure, error logs, screenshots for debugging |
| Execution history reports | Showcase historical test run data | Past executions, outcomes, changes/trends in test performance over time |

These reports serve distinct purposes in evaluating different aspects of the testing process, aiding teams in making informed decisions to enhance software quality and efficiency.
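As a rough illustration of how the first few report types relate, here is a minimal Python sketch that builds a summary report (counts) and a failure analysis list (failed tests with their errors) from per-test records, which are essentially the rows of a detailed test case report. The `CaseResult` fields and the status vocabulary are assumptions, not any particular tool's schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseResult:
    """One row of a detailed test case report (fields are illustrative)."""
    name: str
    status: str  # assumed to be "passed", "failed", or "skipped"
    duration_s: float
    error: Optional[str] = None


def summary_report(results):
    """High-level counts of tests run, passed, failed, and skipped."""
    counts = {"total": len(results), "passed": 0, "failed": 0, "skipped": 0}
    for r in results:
        counts[r.status] += 1  # raises KeyError for an unexpected status
    return counts


def failure_report(results):
    """Failed tests paired with their error messages, for debugging."""
    return [
        (r.name, r.error or "no error message recorded")
        for r in results
        if r.status == "failed"
    ]


results = [
    CaseResult("login_valid_user", "passed", 1.2),
    CaseResult("checkout_card", "failed", 4.8, "TimeoutError: payment form did not load"),
    CaseResult("search_by_sku", "skipped", 0.0),
]
print(summary_report(results))
for name, error in failure_report(results):
    print(f"FAILED {name}: {error}")
```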

Image: TestRail allows you to make data-driven decisions faster with test analytics and reports that give you the full picture of your quality operations.

Key features to look for in a test automation report

A thorough test automation report depends on a capable reporting tool. When evaluating one, consider these key features:

1. Accessibility

Test automation reports are only useful if stakeholders can reach them. A tool with robust accessibility features gives team members, developers, and other stakeholders ready access to results.

Considerations:

- Online accessibility: Reports should be easily accessible, allowing team members to view them without needing specific software installations.

- Integration with CI/CD: Seamless integration with continuous integration and continuous deployment (CI/CD) pipelines makes an up-to-date report available automatically after each test run.
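One simple way to meet both considerations is to have the CI job emit a self-contained HTML page and publish it as a build artifact. The sketch below assumes results are already available as `(name, status, seconds)` tuples; the `render_html_report` function and the `make_report.py` script name are hypothetical.

```python
import html


def render_html_report(title, rows):
    """Render (test name, status, seconds) rows as one standalone HTML page.

    The page uses no external CSS or JavaScript, so anyone with a browser can
    open it once a CI job publishes it as a build artifact.
    """
    body = "\n".join(
        f"<tr><td>{html.escape(name)}</td><td>{html.escape(status)}</td>"
        f"<td>{seconds:.2f}</td></tr>"
        for name, status, seconds in rows
    )
    safe_title = html.escape(title)  # escape &, <, > and quotes in user-supplied text
    return (
        "<!DOCTYPE html>\n"
        f"<html><head><meta charset='utf-8'><title>{safe_title}</title></head>\n"
        f"<body><h1>{safe_title}</h1>\n"
        "<table>\n<tr><th>Test</th><th>Status</th><th>Time (s)</th></tr>\n"
        f"{body}\n</table>\n</body></html>\n"
    )


if __name__ == "__main__":
    rows = [("checkout_card", "failed", 4.8), ("login_valid_user", "passed", 1.2)]
    # In CI, redirect this to a file, e.g. `python make_report.py > report.html`
    # (hypothetical script name), and publish that file as a build artifact.
    print(render_html_report("Nightly regression run", rows))
```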

2. Readability

Readability is a fundamental characteristic of effective test automation reporting. A clear and concise presentation of information ensures that stakeholders can understand the status of test cases and identify areas that need attention.

Considerations:

- Clear formatting: The report should use clear formatting, including headings, bullet points, and tables, to enhance readability. The incorporation of charts and graphs can make complex data more digestible.

3. Data-rich

A data-rich report provides detailed insights into the test execution process. Comprehensive data allows teams to identify patterns, trends, and potential issues, contributing to more informed decision-making.

Considerations:

- Execution time: Information about the time taken for each test and the test suite execution time is essential for performance analysis.
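Execution-time data is mostly simple aggregation. This minimal sketch, using hypothetical test names and durations, sums per-test times and lists the slowest tests with their share of the total.

```python
# Hypothetical per-test durations in seconds, e.g. taken from a JUnit XML report.
durations = {
    "checkout_card": 4.8,
    "checkout_wallet": 3.1,
    "login_valid_user": 1.2,
    "search_by_sku": 0.4,
}


def timing_summary(durations, top_n=3):
    """Return the summed test time and the top_n slowest tests with their share of it."""
    total = sum(durations.values())
    slowest = sorted(durations.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
    return total, [(name, secs, secs / total if total else 0.0) for name, secs in slowest]


total, slowest = timing_summary(durations, top_n=2)
print(f"Summed test time: {total:.1f}s")
for name, secs, share in slowest:
    print(f"  {name}: {secs:.1f}s ({share:.0%} of total)")
```

The summed per-test time equals the suite's wall-clock time only when tests run sequentially; with parallel execution, the wall-clock time is usually lower, so a report may need to show both.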

4. Organized

A well-organized report structure is crucial for effective communication within a team. It helps people find what they need and prevents them from feeling overwhelmed by the presented information.

Considerations:

- Logical sections: Divide the report into logical sections, including a summary, detailed results, and historical trends.

- Hierarchical view: The tool should provide a hierarchical view of test suites, test cases, and their dependencies.
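A hierarchical view can be built from flat results when test identifiers encode their suite. The sketch below assumes hypothetical IDs of the form `suite.case` and nests them into per-suite counts and case lists; real tools may use class names, folders, or explicit suite metadata instead.

```python
from collections import Counter, defaultdict

# Hypothetical results keyed by "suite.case" identifiers.
results = {
    "checkout.pay_with_card": "failed",
    "checkout.pay_with_wallet": "passed",
    "auth.login_valid_user": "passed",
    "auth.login_locked_user": "skipped",
}


def group_by_suite(results):
    """Nest flat results into {suite: {"counts": Counter, "cases": {case: status}}}."""
    tree = defaultdict(lambda: {"counts": Counter(), "cases": {}})
    for test_id, status in results.items():
        suite, case = test_id.split(".", 1)  # assumes a single "suite." prefix per ID
        tree[suite]["cases"][case] = status
        tree[suite]["counts"][status] += 1
    return dict(tree)


# Print each suite with its totals, then its cases indented beneath it.
for suite, node in sorted(group_by_suite(results).items()):
    counts = node["counts"]
    print(f"{suite}: {counts['passed']} passed, {counts['failed']} failed, {counts['skipped']} skipped")
    for case, status in sorted(node["cases"].items()):
        print(f"    {case}: {status}")
```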

5. Provides a clear big picture

Detailed results are essential, but a clear view of the overall testing status matters just as much. A high-level summary helps stakeholders grasp the project’s health without delving into minute details.

Considerations:

- Summary dashboard: A dashboard or summary section should provide an overview of key metrics.

- Trend analysis: Historical data and trend analysis features contribute to understanding the project’s progress over time.
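Trend analysis often starts with the pass rate per run and its change from the previous run. This minimal sketch assumes a hypothetical history of `(run label, passed, total)` tuples ordered from oldest to newest.

```python
# Hypothetical history of consecutive runs: (run label, passed, total).
history = [
    ("build-101", 180, 200),
    ("build-102", 188, 200),
    ("build-103", 176, 205),
]


def pass_rate_trend(history):
    """Return (label, pass rate, change vs. previous run); change is None for the first run."""
    trend = []
    previous = None
    for label, passed, total in history:
        rate = passed / total if total else 0.0
        change = None if previous is None else rate - previous
        trend.append((label, rate, change))
        previous = rate
    return trend


for label, rate, change in pass_rate_trend(history):
    delta = "" if change is None else f" ({change:+.1%})"
    print(f"{label}: {rate:.1%}{delta}")
```

A drop such as the one in the last sample run is what a trend chart on a dashboard would make visible at a glance.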

Image: In TestRail, you can monitor the progress of all your testing activities in one place, from manual exploratory tests to automated regression tests and everything in between.

Test reporting tools

A test reporting tool is a software application or framework designed to generate, organize, and present information about the results of automated tests. It compiles data from test executions and transforms it into understandable reports, offering insights into the health and effectiveness of the testing process.

Benefits of test reporting tools

Test reporting tools provide clear insights into test outcomes, aiding informed decision-making and optimizing testing efforts by identifying trends and maximizing resource impact for continual software quality enhancement.

- Visibility: Offer clear insights into test outcomes and execution details.

- Informed decision-making: Aid in making informed decisions based on comprehensive test data.

- Communication: Facilitate effective communication among stakeholders regarding software quality.

- Optimization: Help optimize testing efforts by identifying trends and areas for improvement.

- Resource maximization: Maximize the impact of testing resources for enhanced software quality.

- Efficiency: Streamline testing processes, reducing manual effort and potential errors.

- Continuous improvement: Drive continual enhancement in software quality through actionable insights and iterative improvements.

How to choose the right test reporting tool

Here are the top five considerations when choosing a test reporting tool:

- Alignment with project needs: Assess whether the tool aligns with the specific reporting requirements of your project, including the types of reports needed, level of detail, and customization options.

- Integration capabilities: Ensure the tool seamlessly integrates with your existing test automation frameworks, continuous integration/deployment (CI/CD) pipelines, and other tools used in the development lifecycle.

- Usability and accessibility: Opt for a user-friendly tool, offering an intuitive interface for easy access and understanding of reports by team members with varying technical expertise.

- Scalability and flexibility: Consider the tool’s ability to scale with your project’s growth and complexities, providing flexibility to adapt to changing testing needs or expanding test suites.

- Support and updates: Evaluate the tool’s support system, availability of updates, and responsiveness to issues or feature requests to ensure a smooth experience and quick resolution of any concerns.

Check out TestRail Academy’s Reports & Analytics course to learn more about how TestRail can help you visualize test data in real time and how to use report templates to check test coverage and traceability.

Popular test reporting tools

Here’s a table summarizing some of the most popular test reporting tools:

| Test Reporting Tool | Description |
| --- | --- |
| TestRail | A popular test management platform with an intuitive interface and customizable reports. It integrates with various testing frameworks, providing flexibility in generating tailored reports. |
| Extent Reports | Offers reports available in HTML, PDF, and email formats. It summarizes test execution details, aiding in result analysis. |
| Allure Reports | Provides reports with graphs and charts, supporting multiple programming languages and frameworks for clear result representation. |
| JUnit | A widely used Java testing framework. JUnit test runs commonly produce XML reports, typically generated by build tools such as Maven or Gradle, which CI servers can then ingest. |
| Mochawesome | Specifically designed for Mocha, a JavaScript test framework. Mochawesome generates HTML reports, focusing on simplicity and aesthetics in presenting test results. |
| TestNG | A Java testing framework offering basic HTML- and XML-based reports. Its compatibility with various plugins extends its reporting capabilities to suit project needs. |
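Because many of these tools read or write JUnit-style XML, it helps to know what that data looks like. The sketch below parses a small, hypothetical report with Python's standard `xml.etree.ElementTree` module. JUnit XML is a de facto convention rather than one formal standard, and tools differ in the attributes they emit, so this only reads the common `testcase`, `failure`, `error`, and `skipped` elements.

```python
import xml.etree.ElementTree as ET

# A small, hypothetical report in the common JUnit XML layout.
SAMPLE = """<testsuites>
  <testsuite name="checkout" tests="3" failures="1" errors="0" skipped="1">
    <testcase classname="checkout.CardTest" name="pay_with_card" time="4.8">
      <failure message="payment form did not load">TimeoutError</failure>
    </testcase>
    <testcase classname="checkout.CardTest" name="pay_with_saved_card" time="2.1"/>
    <testcase classname="checkout.WalletTest" name="pay_with_wallet" time="0">
      <skipped/>
    </testcase>
  </testsuite>
</testsuites>"""


def parse_junit_xml(text):
    """Return one dict (name, status, time) per <testcase> element."""
    root = ET.fromstring(text)
    cases = []
    # iter() visits the root and all descendants, so this works whether the
    # file's root element is <testsuites> or a single <testsuite>.
    for case in root.iter("testcase"):
        # Errors are counted as failures here, for simplicity.
        if case.find("failure") is not None or case.find("error") is not None:
            status = "failed"
        elif case.find("skipped") is not None:
            status = "skipped"
        else:
            status = "passed"
        cases.append({
            "name": f"{case.get('classname', '')}.{case.get('name', '')}",
            "status": status,
            "time": float(case.get("time") or 0),
        })
    return cases


for case in parse_junit_xml(SAMPLE):
    print(f"{case['status']:<8} {case['time']:>5.1f}s  {case['name']}")
```

Python's documentation warns that `xml.etree.ElementTree` is not secure against maliciously constructed data; this only matters if report files come from untrusted sources.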

Key test automation reporting metrics

Reporting metrics offer quantifiable insights into testing progress, the effectiveness of testing efforts, and areas for improvement, and ultimately enable informed decision-making.

Here’s a list of ten key test automation reporting metrics commonly used in software testing:

| Metric | Description |
| --- | --- |
| Test coverage | Measures the functional and non-functional areas of the system under test that are exercised by tests. This may include user modules, specific areas of the user interface, APIs, etc. |
| Pass/fail rates | Indicates the percentage of test cases passing or failing during test execution |
| Execution time | Measures the time taken to execute test suites or individual test cases |
| Defect density | Calculates the number of defects found per unit of code (for example, per thousand lines) or per test case, giving a quantitative view of the quality of the system under test |
| Test case success rate | Measures the percentage of successful test cases out of the total executed, highlighting test reliability |
| Code complexity | Assesses the complexity of the code under test; more complex code is generally more likely to contain defects |
| Regression test effectiveness | Evaluates the ability of regression tests to identify new defects or issues |
| Error/failure distribution | Identifies the frequency and distribution of test failures across the components or modules of the system under test |
| Test maintenance effort | Quantifies the effort required to maintain test suites, which affects testing efficiency and reliability over time |
| Requirements coverage | Measures the percentage of requirements covered by test cases, ensuring traceability between implemented features and the tests that validate them |
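Several of these metrics reduce to simple ratios. The following is a minimal Python sketch of three of them (pass rate, defect density, and requirements coverage), assuming defect density is expressed per thousand lines of code (teams may normalize by test cases instead, as the table notes) and that test-to-requirement links are available as a mapping. All function names, requirement IDs, and figures are hypothetical.

```python
def pass_rate(passed, executed):
    """Pass/fail rates: share of executed test cases that passed."""
    return passed / executed if executed else 0.0


def defect_density(defects, lines_of_code):
    """Defects per thousand lines of code (KLOC), one common unit for this metric."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def requirements_coverage(requirement_ids, tests_to_requirements):
    """Share of requirements linked to at least one test case."""
    required = set(requirement_ids)
    covered = {req for reqs in tests_to_requirements.values() for req in reqs}
    return len(required & covered) / len(required) if required else 0.0


# Hypothetical figures, for illustration only.
links = {"test_pay_card": {"REQ-1", "REQ-2"}, "test_login": {"REQ-3"}}
print(f"Pass rate: {pass_rate(188, 200):.1%}")
print(f"Defect density: {defect_density(12, 48_000):.2f} defects/KLOC")
print(f"Requirements coverage: {requirements_coverage(['REQ-1', 'REQ-2', 'REQ-3', 'REQ-4'], links):.0%}")
```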

Test automation reporting is pivotal in software testing. These detailed reports drive informed decisions, streamline communication, and improve software quality. Check out TestRail today and choose the right reporting tool that ensures an agile, quality-focused development process backed by data-driven insights.